Mobile mapping technology has been around for a few years now; nevertheless, it is still a relatively new and unfamiliar technology for many surveyors. Whilst early adopters mainly see the benefits, such as ease of use, speed of deployment and data capture, and the richness of the data it provides, many traditional companies still have questions about the quality and precision of the data. Certainly every system, terrestrial or mobile, has specific applications for which it is best suited. So what happens when you work on a project that stretches across multiple survey applications, e.g. city mapping and indoor mapping? And how do you decide which technology to use without compromising on the quality of the delivered data?

Based on many years of experience with terrestrial and mobile scanning, here is my simple answer: there is no need to compromise. You use the best-suited sensor (terrestrial or mobile) to capture the data and then use robust registration software to merge the point clouds, producing the best data set for your customer.

At HxGN LIVE 2017 in Las Vegas, I assisted our reality capture product manager, Mike Harvey, during his hands-on workshop “Combining point clouds from multiple sources”. In this blog post I will present the most important lessons from that workshop and answer the questions above.

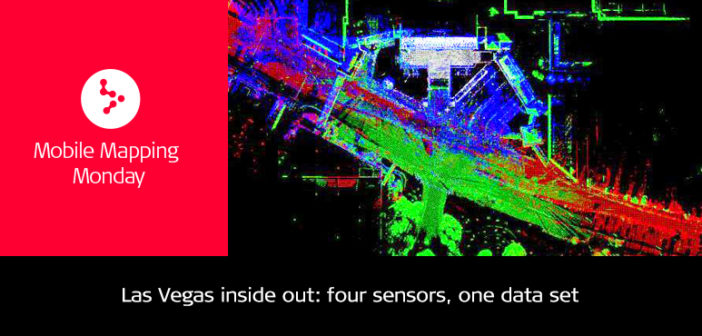

Point clouds from P40 (green), BLK360 (grey), Pegasus:Backpack (blue) and Pegasus:Two (red)

First, have a look at the video below, which gives a good overview of the collected data. The data was captured with the following four sensors the day before the workshop; it took 15 minutes to import and 20 minutes to register:

- Leica Pegasus:Backpack Wearable Mobile Mapping System (blue point cloud)

- Leica Pegasus:Two Vehicle-based Mobile Mapping System (red point cloud)

- Leica P40 High Definition Laser Scanner (green point cloud)

- Leica BLK360 Imaging Laser Scanner (grey point cloud)

1. How to register the point clouds

“The raw data was fused without using targets, total stations, or GPS equipment. The areas were simply overlapped by roughly 20% in order to merge the data neatly. Leica Cyclone Register was used due to its robust, fast, and versatile point cloud registration,” said Mike Harvey. Whether you are combining point cloud data for a small project or a large, widespread one, the software has the tools to produce repeatable and accurate results.

2. Fusing BLK360 with P40 data

First, all data sets needed to be imported into a Cyclone database. The software has direct import for all Leica Geosystems reality capture sensors, which means you simply have to select which sensor data to import. After importing the BLK360 and P40 data into Cyclone, the setups/stations are visualised as regular scan worlds that can be registered with any of the Cyclone Registration workflows. For this project, we selected Visual Alignment for the BLK360 data, which is the easiest function for users who are new to Cyclone, and AutoAlign for the P40 data, which is also easy and saves time. Once the data was imported, we used Visual Alignment once again to align one of the three BLK360 scans to just one of the three P40 scans. The other four scans fell directly into place due to Cyclone Register’s ‘Grouping’ function.
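
Why the other four scans "fall into place" can be illustrated with plain transform composition. The following is only a minimal sketch of the idea, not how Cyclone Register implements grouping: it assumes each scan already has a pose inside its own group, and that a single scan-to-scan alignment (here called `link`) has been found between one scan of each group. All function names and numbers are hypothetical.

```python
import math

def yaw_pose(yaw_deg, tx, ty, tz):
    """4x4 homogeneous transform: rotation about the vertical axis plus a translation."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def compose(a, b):
    """Matrix product a * b, i.e. apply b first and then a."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def invert_rigid(t):
    """Inverse of a rigid transform: transposed rotation, back-rotated translation."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    trans = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[0] + [trans[0]], r_t[1] + [trans[1]], r_t[2] + [trans[2]],
            [0.0, 0.0, 0.0, 1.0]]

def apply(t, p):
    """Transform a 3D point p with the 4x4 matrix t."""
    return tuple(sum(t[i][k] * v for k, v in enumerate((*p, 1.0))) for i in range(3))

def propagate_group(link, anchor_pose_src, anchor_pose_dst, src_group_poses):
    """Move every scan of the source group into the destination group's frame.

    link            -- source anchor scan frame -> destination anchor scan frame
    anchor_pose_src -- pose of the source anchor scan inside its own group
    anchor_pose_dst -- pose of the destination anchor scan inside its group
    """
    group_shift = compose(anchor_pose_dst, compose(link, invert_rigid(anchor_pose_src)))
    return {name: compose(group_shift, pose) for name, pose in src_group_poses.items()}

# Hypothetical poses of three BLK360 scans inside their own, already registered group.
blk_poses = {
    "blk_1": yaw_pose(0, 0, 0, 0),
    "blk_2": yaw_pose(90, 5, 0, 0),
    "blk_3": yaw_pose(180, 5, 5, 0),
}
p40_anchor = yaw_pose(30, 10, 20, 0)   # pose of the chosen P40 scan in the P40 group
link = yaw_pose(-15, 2, -1, 0.5)       # result of aligning blk_1 to that P40 scan

placed = propagate_group(link, blk_poses["blk_1"], p40_anchor, blk_poses)
```

Because every BLK360 pose is multiplied by the same group shift, the relative geometry inside the BLK360 group is untouched; only one scan-to-scan alignment is needed to place all three scans.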

3. Combining P40 with mobile mapping data sets

Since mobile data is already geo-referenced, we merged the registered point cloud from the P40 with the Pegasus:Two data using cloud-to-cloud registration. For those not familiar with this type of registration, it is a technique where three to four common points are selected in each scan, e.g. corners of buildings or corners of windows (see the figure below), to merge the data. The Pegasus:Backpack data was registered to the Pegasus:Two data in the same manner.
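
To show how a handful of picked point pairs can fix the position of one cloud relative to another, here is a minimal sketch. It is not Cyclone's algorithm. It makes a simplifying assumption that both clouds are already level (z axis up), so only a heading rotation and a translation remain to be solved; a full 3D solution would typically use an SVD-based fit instead. The function names and coordinates are hypothetical.

```python
import math

def fit_level_alignment(src_pts, dst_pts):
    """Least-squares heading (rotation about z) and translation mapping src onto dst."""
    n = len(src_pts)
    if n < 2 or n != len(dst_pts):
        raise ValueError("need at least two matching point pairs")
    cs = [sum(p[i] for p in src_pts) / n for i in range(3)]  # source centroid
    cd = [sum(p[i] for p in dst_pts) / n for i in range(3)]  # destination centroid
    dot = cross = 0.0
    for s, d in zip(src_pts, dst_pts):
        sx, sy = s[0] - cs[0], s[1] - cs[1]
        dx, dy = d[0] - cd[0], d[1] - cd[1]
        dot += sx * dx + sy * dy
        cross += sx * dy - sy * dx
    yaw = math.atan2(cross, dot)  # closed-form optimum of the 2D rotation
    c, s_ = math.cos(yaw), math.sin(yaw)
    tx = cd[0] - (c * cs[0] - s_ * cs[1])
    ty = cd[1] - (s_ * cs[0] + c * cs[1])
    tz = cd[2] - cs[2]
    return yaw, (tx, ty, tz)

def transform(pt, yaw, t):
    """Rotate pt about the z axis by yaw, then translate by t."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * pt[0] - s * pt[1] + t[0], s * pt[0] + c * pt[1] + t[1], pt[2] + t[2])

def rms_error(src_pts, dst_pts, yaw, t):
    """Root-mean-square distance between transformed source points and their matches."""
    sq = [sum((a - b) ** 2 for a, b in zip(transform(s, yaw, t), d))
          for s, d in zip(src_pts, dst_pts)]
    return math.sqrt(sum(sq) / len(sq))

# Four hypothetical building and window corners picked in the P40 cloud (local metres).
picked_p40 = [(0.0, 0.0, 0.0), (12.0, 0.0, 0.0), (12.0, 8.0, 3.0), (0.0, 8.0, 6.0)]

# The same corners in the geo-referenced mobile cloud, simulated here with a known
# heading of 40 degrees and a known offset so the fit can be checked.
true_yaw, true_t = math.radians(40.0), (500.0, 1200.0, 30.0)
picked_mobile = [transform(p, true_yaw, true_t) for p in picked_p40]

yaw, t = fit_level_alignment(picked_p40, picked_mobile)
residual = rms_error(picked_p40, picked_mobile, yaw, t)
```

Applying `transform` with the fitted values to every P40 point would place that cloud in the mobile data's geo-referenced frame. The `residual` value is the kind of figure worth checking before accepting an alignment: with real, hand-picked points it will not be zero, and a large value usually points to a mismatched pair.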

4. Finalise the registration as usual

Onwards from here, the usual registration steps were followed:

- review diagnostics

- freeze

- create ModelSpace

In less than three hours, we were able to import data from multiple sensors, register them using different techniques, and teach our class, which had a good mix of experts and beginners attending, how to merge data into a combined geo-referenced database.

Now watch the video again and enjoy the beauty of Las Vegas captured with four different sensors, each best suited to a specific application. For further details on the data sets used and the differences in sensors, follow this fusing data link. We look forward to welcoming you at HxGN LIVE 2018 for more training and education.

Until then… keep reading our Mobile Mapping Monday blog post series for regular updates.

Frank Collazo,

Global AE, Geospatial Solutions Division,

Leica Geosystems, AG